Azkaban workflow manager + eboshi

16 Feb 2016

A few days ago we were looking for a scheduler to execute our MapReduce jobs. We chose the Azkaban workflow manager together with eboshi, because we wanted automatic deployment via SaltStack. Here is our experience with this setup, which consists of the following components:

- mysql

- azkaban-webserver

- azkaban-executor

- eboshi

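To run azkaban-webserver and azkaban-executor under daemontools, we edited their start scripts: the Java process is now started with exec and stays in the foreground, no pid file is written, and the output is appended to a dated log file.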

azkaban-web-start.sh

@@ -45,7 +45,5 @@
 fi

 AZKABAN_OPTS="$AZKABAN_OPTS -server -Dcom.sun.management.jmxremote -Djava.io.tmpdir=$tmpdir -Dexecutorport=$executorport -Dserverpath=$serverpath"

-java $AZKABAN_OPTS $JAVA_LIB_PATH -cp $CLASSPATH azkaban.webapp.AzkabanWebServer -conf $azkaban_dir/conf $@ &
-
-echo $! > $azkaban_dir/currentpid
+exec java $AZKABAN_OPTS $JAVA_LIB_PATH -cp $CLASSPATH azkaban.webapp.AzkabanWebServer -conf $azkaban_dir/conf $@ >> $azkaban_dir/log/azkaban-web-`date +%F`.log

Once the Azkaban web server and executor were online, we created flows for our jobs. Each job is described by a .job file, for example budgetupdatercpi-r.job:

# budgetupdater cpi daily reaggregation job

type=command

dependencies=impressfraud-r

command=/etc/init.d/szn-sklik-budgetupdater daily-azkaban cpi ${date}

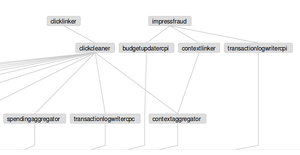

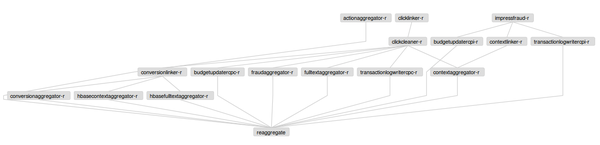

With job files like these, linked through their dependencies lines, we can build an extensive flow like this:

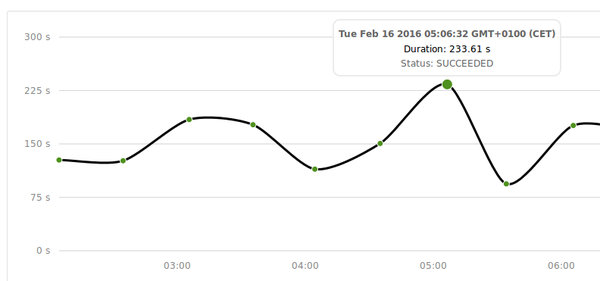
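How a set of .job files turns into an ordered flow can be sketched in a few lines of Python. This is only an illustration of the idea, not Azkaban's own code: the helper names `parse_job` and `execution_order` are made up, and the content of the impressfraud-r job is a placeholder, since only budgetupdatercpi-r.job is shown above.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def parse_job(text):
    """Parse the key=value lines of a .job file, skipping comments and blanks."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def execution_order(jobs):
    """jobs maps job name -> .job file text; returns the names in a valid run order."""
    graph = {}
    for name, text in jobs.items():
        deps = parse_job(text).get("dependencies", "")
        # Each job maps to the set of jobs that must finish before it starts.
        graph[name] = {d.strip() for d in deps.split(",") if d.strip()}
    return list(TopologicalSorter(graph).static_order())


jobs = {
    # Placeholder content: the real impressfraud-r.job is not shown in this post.
    "impressfraud-r": "type=command\ncommand=echo placeholder\n",
    "budgetupdatercpi-r": (
        "# budgetupdater cpi daily reaggregation job\n"
        "type=command\n"
        "dependencies=impressfraud-r\n"
        "command=/etc/init.d/szn-sklik-budgetupdater daily-azkaban cpi ${date}\n"
    ),
}

print(execution_order(jobs))  # ['impressfraud-r', 'budgetupdatercpi-r']
```

A check like this can run before packaging the jobs, because `TopologicalSorter` raises `CycleError` when the dependencies form a loop.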

Azkaban also keeps a history graph of flow executions. It is a really good help when the execution time of your jobs starts rising for no obvious reason.

eboshi and autodeploy

Because our Azkaban web server uses a self-signed certificate, we had to add verify=False to every requests call in eboshi:

-r = requests.post(url + "/schedule", data=params)

+r = requests.post(url + "/schedule", verify=False, data=params)
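Note that verify=False switches certificate checking off entirely. The verify argument of requests also accepts a path to a certificate file or CA bundle, so a possible alternative is to trust the self-signed certificate explicitly. The helper below is a minimal sketch of that idea and is not part of eboshi; the function name and the AZKABAN_CA_BUNDLE environment variable are assumptions made for illustration.

```python
import os


def tls_verify_setting(env=None):
    """Return the value to pass as the `verify=` argument of a requests call.

    If the (hypothetical) AZKABAN_CA_BUNDLE variable points to a certificate
    file, return that path so the self-signed certificate is trusted
    explicitly; otherwise return True, which keeps default verification on.
    """
    env = os.environ if env is None else env
    ca_bundle = env.get("AZKABAN_CA_BUNDLE", "").strip()
    return ca_bundle if ca_bundle else True


# Example with an illustrative path; nothing is read from disk here.
print(tls_verify_setting({"AZKABAN_CA_BUNDLE": "/etc/ssl/azkaban.pem"}))
```

The patched line in eboshi would then read r = requests.post(url + "/schedule", verify=tls_verify_setting(), data=params).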

The schedules are created automatically from this pillar

scheduler:
  clicklinkerspark:
    time: 00,02,AM,CET
    period: 1d
  clicklinkerspark-inc:
    time: 00,02,AM,CET
    period: 30m
  coeccompressor-daily:
    time: 00,57,AM,CET
    period: 1d
  patternwebsaggregator:
    time: 06,02,AM,CET
    period: 1d
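A typo in a time or period value only shows up when the schedule is created, so it can help to validate the pillar values beforehand. The sketch below parses the two formats used above. It is not part of eboshi or Azkaban: the function names are made up, and only the d and m period units appear in our pillar, so the other unit letters (w, h, s) are assumptions.

```python
import re
from datetime import timedelta

# Only "d" and "m" appear in the pillar above; the other letters are assumed.
_UNITS = {"w": "weeks", "d": "days", "h": "hours", "m": "minutes", "s": "seconds"}


def parse_time(value):
    """Split 'HH,MM,AM|PM,TZ' into (hour in 24h format, minute, timezone)."""
    hour, minute, half, tz = [part.strip() for part in value.split(",")]
    hour, minute, half = int(hour), int(minute), half.upper()
    if not (0 <= hour <= 12 and 0 <= minute <= 59) or half not in ("AM", "PM"):
        raise ValueError("invalid schedule time: %r" % value)
    # 12 AM is midnight and 12 PM is noon; the pillar also uses 00 with AM.
    return hour % 12 + (12 if half == "PM" else 0), minute, tz


def parse_period(value):
    """Turn a period such as '1d' or '30m' into a timedelta."""
    match = re.fullmatch(r"(\d+)([wdhms])", value.strip())
    if match is None:
        raise ValueError("invalid period: %r" % value)
    amount, unit = match.groups()
    return timedelta(**{_UNITS[unit]: int(amount)})


print(parse_time("00,02,AM,CET"))  # (0, 2, 'CET')
print(parse_period("30m"))         # 0:30:00
```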

This Salt state calls eboshi in a for loop and creates every schedule defined in the pillar:

{% for flow, metadata in salt['pillar.get']('scheduler', {}).iteritems() %}
{{ flow }}-create-schedule:
  cmd.run:
    - name: /usr/local/bin/eboshi addSchedule --url https://{{ grains['fqdn'] }}:8443 --username admins6 --password {{ pillar['pass.azkaban.admins6'] }} --project sklik --flow {{ flow }} --date 01/01/2016 --time {{ metadata['time'] }} --period {{ metadata['period'] }}
{% endfor %}
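To preview what the loop above will run without touching Azkaban, the same expansion can be reproduced in plain Python. This is a dry-run sketch under a few assumptions: the function name is made up, the host name and password are placeholders, and the eboshi flags are simply copied from the state above.

```python
import shlex


def eboshi_commands(schedules, url, username, password, project, date="01/01/2016"):
    """Build one eboshi addSchedule argument list per flow, as the Salt loop does."""
    commands = []
    for flow, metadata in sorted(schedules.items()):
        commands.append([
            "/usr/local/bin/eboshi", "addSchedule",
            "--url", url,
            "--username", username,
            "--password", password,
            "--project", project,
            "--flow", flow,
            "--date", date,
            "--time", metadata["time"],
            "--period", metadata["period"],
        ])
    return commands


# Two entries taken from the pillar above.
schedules = {
    "clicklinkerspark": {"time": "00,02,AM,CET", "period": "1d"},
    "clicklinkerspark-inc": {"time": "00,02,AM,CET", "period": "30m"},
}

# Host name and password are placeholders, not real values.
for argv in eboshi_commands(schedules, "https://azkaban.example.com:8443",
                            "admins6", "PLACEHOLDER", "sklik"):
    print(shlex.join(argv))  # shlex.join needs Python 3.8+
```

Building argument lists instead of one long string avoids quoting problems if a flow name ever contains a space or shell metacharacter.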

The pillar also defines the versions of the packages that contain our jobs. Now, when we want to edit or add a schedule, we only edit the pillar file and run salt "HOSTNAME" state.highstate. This call updates our packages and sets all schedules.